FAQs on Predictive Policing and Bias

Last month Significance magazine published an article on the topic of predictive policing and police bias, which I co-authored with William Isaac. Since then, we’ve published a blogpost about it and fielded a few recurring questions. Here they are, along with our responses.


Do your findings still apply given that PredPol uses crime reports rather than arrests as training data?

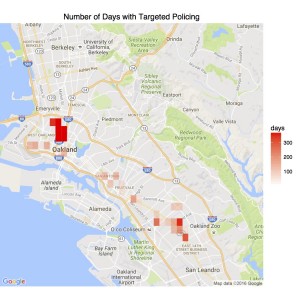

Because this article was meant for an audience that is not necessarily well-versed in criminal justice data, and because we were under a strict word limit, we simplified the language we used to describe the data. The data we used is a version of the Oakland Police Department’s crime reports.[1] Members of OpenOakland.org have collected this data since 2013, and it goes back to 2007. We used data from 2010-2011 to best match the period in which both the Census data (from which the synthetic population[2] was built) and the NSDUH[3] data were collected. This crime reports dataset includes all drug crime “incidents,” meaning both arrests and reports of crime that did not result in an arrest or citation. This is, in fact, very similar to the type of data used in PredPol’s systems.

This question, however, misses the larger point of our argument. Whether a predictive model is run on arrest data, citizen reports, or a combination of both, the same problem remains: all of these datasets are likely to be biased by differing levels of crime documentation across neighborhoods and over time. This variation could result from differing levels of police attention or from differing levels of community reporting. Thus, the crux of our argument does not depend on whether we used exactly the same data that PredPol uses. We are making a more general argument: machine learning algorithms will, at best, fail to correct any bias in the police data. At worst, if police resources are allocated on the basis of biased predictions, the data, and the policing strategies that follow from it, will become increasingly biased.
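A minimal sketch of the "at best" case, using made-up numbers: two areas have identical true levels of drug crime, but incidents in one area are more likely to be recorded (through either police attention or community reporting). The recording probabilities and the trivial stand-in "model" below are hypothetical illustrations, not PredPol's model or the model from our article.

```python
"""Sketch: a model fit to recorded crime inherits the recording bias.

All numbers are hypothetical and chosen only for illustration.
"""

# True (unobserved) drug-crime incidents per week: identical in both areas.
TRUE_RATE = {"area_a": 100.0, "area_b": 100.0}

# Hypothetical probability that an incident ends up in police records,
# e.g. because of differing police attention or community reporting.
RECORDING_PROB = {"area_a": 0.6, "area_b": 0.2}


def recorded_counts(true_rate, recording_prob):
    """Expected number of incidents that appear in the records for each area."""
    return {area: true_rate[area] * recording_prob[area] for area in true_rate}


def predicted_share(counts):
    """Stand-in 'model': predict each area's share of future crime from its
    share of the counts it is given. A model trained only on the records is
    trained on the same skew that the records contain."""
    total = sum(counts.values())
    return {area: count / total for area, count in counts.items()}


records = recorded_counts(TRUE_RATE, RECORDING_PROB)
prediction = predicted_share(records)
true_share = predicted_share(TRUE_RATE)

for area in TRUE_RATE:
    print(f"{area}: true share {true_share[area]:.2f}, "
          f"predicted share {prediction[area]:.2f}")
```

Although each area accounts for half of the true crime, the prediction assigns 75% of future crime to `area_a`, simply because that area's crime is recorded three times as often.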

We would also like to draw attention to the logical conclusion of the suggestion that, had we used all crime reports rather than just arrests, we would not have obtained this result. For that to be the case, one would have to believe that the set of all crime reports is representative of all crime, but that arrests are highly biased (in this case, toward minority and low-income communities). In other words, the suggestion implies that while police know about crimes in all locations equally, they arrest individuals for those same crimes only in low-income and minority neighborhoods.

The baseline against which you compare the police data is an estimate of the number of drug users who reside in each location. Because people do not always do drugs in their homes, this isn’t a fair comparison.

It is true that our baseline comparison model assumes that drug crime occurs in proportion to the number of residents in an area who are drug users. While some drug use (and the resulting recorded crime) occurs in public spaces or away from the suspect’s primary residence, research suggests that most drug use takes place in indoor spaces such as a private residence, or in social settings such as a party or nightclub, often to reduce the chance of being caught by the police[4],[5]. Roughly 65% of incidents in the crime report data we used were related to drug possession rather than distribution or selling, and distribution and selling are the activities less likely to occur near a person’s home. Given this, we believe that residence-based estimates are a reasonable proxy for the distribution of drug crimes in Oakland.
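To make the residence-based baseline concrete, here is a minimal sketch of the general idea: assign each resident of a synthetic population a drug-use probability based on their demographic group (as a survey such as NSDUH might provide), then sum those probabilities by area of residence. The group definitions, rates, and tiny population below are hypothetical placeholders, not NSDUH figures and not the exact procedure from our article. Summing by home area is precisely the assumption discussed above: each user's drug use is attributed to where they live.

```python
from collections import defaultdict

# Hypothetical drug-use rates by (age group, sex). These are placeholders,
# NOT actual NSDUH estimates.
USE_RATE = {
    ("18-25", "male"): 0.20,
    ("18-25", "female"): 0.15,
    ("26+", "male"): 0.08,
    ("26+", "female"): 0.05,
}

# A toy synthetic population: one (area, age_group, sex) tuple per resident.
SYNTHETIC_RESIDENTS = (
    [("area_a", "18-25", "male")] * 50
    + [("area_a", "26+", "female")] * 150
    + [("area_b", "18-25", "female")] * 100
    + [("area_b", "26+", "male")] * 100
)


def expected_users_by_area(residents, use_rate):
    """Sum each resident's group-level use probability within their home area
    (the residence-based assumption)."""
    totals = defaultdict(float)
    for area, age_group, sex in residents:
        totals[area] += use_rate[(age_group, sex)]
    return dict(totals)


def shares(counts):
    """Convert per-area counts into each area's share of the total."""
    total = sum(counts.values())
    return {area: count / total for area, count in counts.items()}


users = expected_users_by_area(SYNTHETIC_RESIDENTS, USE_RATE)
baseline = shares(users)
print(users)
print(baseline)
```

Here `area_a` is expected to contain about 17.5 users and `area_b` about 23, so the baseline would attribute roughly 43% and 57% of drug crime to the two areas, respectively.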

Furthermore, our simulation demonstrates that if police find additional crime in the locations they target, the algorithm will increasingly single out those same locations for police attention. This conclusion does not require our estimates of where drug crimes occur to be accurate. Even in a scenario where representative crime data is available, applying the predictive policing algorithm will cause the data to become unrepresentative. This happens whether or not our residence-based estimates paint a realistic picture of drug use.
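The feedback loop can be illustrated with a deliberately simplified, deterministic toy. It is not the simulation from our article and not PredPol's model. Its assumptions are hypothetical: two areas have the same true amount of crime; each day a stand-in "model" flags whichever area has the most recorded crime so far; and crime in the flagged area is recorded at a higher rate because police are sent there. The records start out nearly representative.

```python
"""Toy feedback-loop simulation (deterministic, expected values only).

Hypothetical assumptions, not taken from our article or from PredPol:
- two areas with the SAME true daily number of drug crimes;
- each day the stand-in 'model' flags the area with the most recorded crime;
- crime in the flagged area is recorded at a higher rate (police are there).
"""

TRUE_DAILY_CRIMES = 10.0
P_RECORD_TARGETED = 0.5    # hypothetical recording rate where police are sent
P_RECORD_UNTARGETED = 0.1  # hypothetical recording rate elsewhere


def simulate(initial_records, days):
    """Return final cumulative records and area_a's share of records each day."""
    records = dict(initial_records)
    history = []
    for _ in range(days):
        # Stand-in prediction: the area with the most recorded crime to date.
        target = max(records, key=records.get)
        for area in records:
            p = P_RECORD_TARGETED if area == target else P_RECORD_UNTARGETED
            records[area] += TRUE_DAILY_CRIMES * p
        history.append(records["area_a"] / sum(records.values()))
    return records, history


# Start almost balanced: area_a has only slightly more records than area_b.
final, share_a = simulate({"area_a": 11.0, "area_b": 10.0}, days=30)
print(f"area_a share of records: day 1 {share_a[0]:.2f}, "
      f"day 30 {share_a[-1]:.2f}")
```

Even though true crime is split evenly, `area_a`'s share of the records rises every day, from 0.59 after day 1 to 0.80 after day 30, because the area that is watched more closely generates more records, which in turn keep it at the top of the predictions.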

PredPol does not make predictions about drug crime.

We chose to focus on predicting drug crimes specifically because there are other sources of information about drug use (namely, public health surveys). This allows us to compare police data on drug crime with other, more representative information about drug use. The point of this analysis is to show that police records are statistically unrepresentative of where crimes occur and instead reflect a mixture of societal and institutional factors. Numerous academic studies[6],[7] have documented large disparities in the enforcement of drug laws: low-income neighborhoods with many residents of color experience high rates of enforcement and thus tend to be overrepresented in crime report data. With this established, we explore how applying a predictive policing technique such as PredPol to this data would reinforce the statistical biases already present in the police data.
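One simple way to express this comparison is a representation ratio: an area's share of police drug-crime records divided by its share of survey-based estimated drug users. A ratio above 1 means the area appears in the records more often than the survey baseline would suggest. The function name and the input numbers below are hypothetical and serve only as an illustration; this is a sketch of the comparison, not the statistic reported in our article.

```python
def representation_ratio(record_counts, estimated_users):
    """Ratio of each area's share of police records to its share of estimated
    drug users. Above 1: over-represented in the records relative to the
    survey-based baseline; below 1: under-represented."""
    total_records = sum(record_counts.values())
    total_users = sum(estimated_users.values())
    return {
        area: (record_counts[area] / total_records)
        / (estimated_users[area] / total_users)
        for area in record_counts
    }


# Hypothetical inputs: equal estimated users, unequal police records.
records = {"area_a": 300, "area_b": 100}
users = {"area_a": 500.0, "area_b": 500.0}
ratios = representation_ratio(records, users)
print(ratios)  # {'area_a': 1.5, 'area_b': 0.5}
```

With equal estimated drug use, an area holding three quarters of the records is over-represented by a factor of 1.5, and the other area is correspondingly under-represented.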

Our arguments are specific neither to drug crime nor to PredPol. We did not intend to single out PredPol in our analysis, and we appreciate that its authors published their methodology in a peer-reviewed journal for other scholars to evaluate. Rather, we are making a general point about the consequences of applying machine learning models to crime report records without correcting for the non-representative process that generated the data. Any reasonable machine learning model applied to this data would have produced very similar predictions, so this is not an indictment of PredPol in particular.

Incidentally, the recent data challenge issued by the National Institute of Justice[8] specifically requires that participants make predictions of the locations of future drug crime on the basis of past police data. Even if PredPol or other predictive policing software is not currently used to predict drug crimes, predictive analytics for drug crime is in active development. We hope that our demonstration can contribute to the conversation surrounding the pitfalls of this endeavor.

[1] http://data.openoakland.org/dataset/crime-reports

[2] http://www.epimodels.org/drupal/?q=node/32

[3] https://nsduhwebesn.rti.org/respweb/homepage.cfm

[4] Dunlap, E., Johnson, B. D., & Benoit, E. (2005). Sessions, Cyphers, and Parties: Settings for Informal Social Controls of Blunt Smoking. Journal of Ethnicity in Substance Abuse, 4(3–4), 43–80.

[5] Mayock, P., Cronly, J., & Clatts, M. C. (2015). The Risk Environment of Heroin Use Initiation: Young Women, Intimate Partners, and “Drug Relationships”. Substance Use & Misuse, 50(6), 771–782.

[6] Beckett, K., Nyrop, K., & Pfingst, L. (2006). Race, Drugs, and Policing: Understanding Disparities in Drug Delivery Arrests. Criminology, 44(1), 105–137.

[7] Golub, A., Johnson, B. D., & Dunlap, E. (2007). The Race/Ethnicity Disparity in Misdemeanor Marijuana Arrests in New York City. Criminology & Public Policy, 6(1), 131–164.

[8] http://www.nij.gov/funding/Pages/fy16-crime-forecasting-challenge.aspx